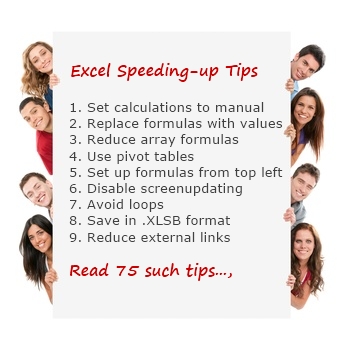

As part of our Speedy Spreadsheet Week, I asked you to share your favorite tips & techniques for speeding up Excel. And what a mind-blowing response you gave: 75 of you responded with lots of valuable tips & ideas to speed up Excel formulas, VBA & everything else.

How to read this post?

Since this is a very large article, I suggest reading a few tips at a time & practicing them. Consider bookmarking this page so that you can refer to these wonderful ideas when you are wrestling with a sluggish workbook.

Thanks to all the contributors

Many thanks to everyone who shared their tips & ideas with us. If you like the tips, please say thanks to the contributor.

Please note that I am not able to share some of the files you emailed as they contained personal / sensitive data.

Read Excel Speeding-up tips by area

This page is broken into 3 parts. Click on any link to access those tips.

Formula Speeding-up Tips

VBA / Macros Optimization Tips

Everything Else

Share your tips

Formula Speeding-up Tips

I use Formulas > Calculation Options > Manual to disable calculations when setting up complex, inter-related formula pages. This stops Excel from churning through calculations every time I change a cell, saving me time. I just hit F9 to recalculate when I want to see the results.

I use

Application.ScreenUpdating = False and

Application.Calculation = xlManual

to speed up macros, and

Application.StatusBar = LoopNum

so I can see the status of my macro and estimate how long there is left to calculate. Don’t forget to switch these back at the end of the macro!
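Here is a minimal sketch of how those three settings might fit together in one macro. The macro name, the loop count and the loop body are placeholders I made up for illustration, not the contributor's actual code.

```vba
Sub ProcessRowsWithProgress()
    ' Hypothetical macro: the name, TOTAL_LOOPS and the loop body are illustrative.
    Const TOTAL_LOOPS As Long = 10000
    Dim LoopNum As Long

    Application.ScreenUpdating = False
    Application.Calculation = xlCalculationManual

    On Error GoTo CleanUp
    For LoopNum = 1 To TOTAL_LOOPS
        ' ... the real work for this iteration goes here ...

        ' Refresh the status bar only every 500 loops; updating it on
        ' every pass would itself slow the macro down.
        If LoopNum Mod 500 = 0 Then
            Application.StatusBar = "Processing " & LoopNum & " of " & TOTAL_LOOPS
        End If
    Next LoopNum

CleanUp:
    ' Reached on success and on error, so the settings are always switched back.
    Application.StatusBar = False          ' hand the status bar back to Excel
    Application.Calculation = xlCalculationAutomatic
    Application.ScreenUpdating = True
End Sub
```

Setting `Application.StatusBar = False` at the end matters: otherwise the last progress message stays on screen after the macro finishes.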

When I have complex formulas with results that won’t change, I hard-code these to save calculation time, but I keep the formula only in the first cell, or pasted in a comment.

Hi Chandoo,

In spreadsheets that have VLOOKUPs, if the source file is not going to change, I have realized that it is better to paste-special the VLOOKUP results as values. This is because even a couple of VLOOKUPs can slow down the file massively, since the values are recalculated every time.

Another step I take (this depends on the criticality of data and other factors) is to set the auto-save function in Excel to an infrequent interval.

Adi

Replace SUMPRODUCT formulas with COUNTIFS or SUMIFS where possible – they calculate much faster!!

Avoid a large number of SUMIFS; instead, aggregate the data into a PivotTable, then use the INDEX(MATCH) combo to locate the sums.

I have dramatically sped up large worksheets doing this.

1. Change Calculation to Manual mode. Calculate manually only when required.

2. Delete all named ranges, unused areas and unnecessary formatting.

I think some of the more basic, but highly effective tips to speed up larger workbooks are:

1.) Avoid array formulae, where possible. Everyone knows there are a million ways to skin the proverbial Excel cat. Find alternatives to array formulae.

2.) Adjust the calculation options, if necessary. Frequent calculations = sluggishness. A word of warning, though – people need to know if calculations aren’t automatic, or it can/will cause confusion.

3.) If all else fails, copy and paste as values. If the recipients of your data don’t need the flexibility to enter new data and update values with calculations, take formulae out of the equation (no pun intended) altogether.

I replaced all my SUMPRODUCT formulas with SUMIFS and calculation time went from about 50 seconds to instantaneous. My system is an AMD 6 processor with 8 GB of memory, running Excel 2007.
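To make the swap concrete, here is a small sketch that writes the SUMIFS version of a two-condition sum. The column layout (Region, Month, Amount) and the cell addresses are assumptions for illustration only.

```vba
Sub UseSumifsInsteadOfSumproduct()
    ' Hypothetical layout: Region in A2:A10000, Month in B2:B10000,
    ' Amount in C2:C10000; criteria in E2 (region) and F2 (month).

    ' SUMPRODUCT version, which builds and multiplies whole arrays:
    ' =SUMPRODUCT(($A$2:$A$10000=E2)*($B$2:$B$10000=F2)*$C$2:$C$10000)

    ' SUMIFS version (needs Excel 2007 or later):
    Range("G2").Formula = _
        "=SUMIFS($C$2:$C$10000,$A$2:$A$10000,E2,$B$2:$B$10000,F2)"
End Sub
```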

Stay away from array formulas (unless you have calculation set to Manual).

A simple, little tip/trick for speeding up calculating:

Sometimes a workbook has so many formulas that, to work effectively, you have no choice but to turn off automatic calculation. You keep working on the workbook, writing new formulas. There is no problem if you wrote just one formula in one cell: it can be calculated with the F2-Enter combo. The problem comes when you create a new formula for a whole column. You don’t have to calculate the whole workbook or “F2-Enter” every cell. Just select the column you want to calculate, press Ctrl+H and replace “=” with “=”. It’s a known trick, but some people may not know it yet. Cheers.

PS. I don’t work on English version of Excel so my translations may not be accurate.
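The same idea can be scripted. This is a rough sketch that assumes calculation is set to Manual and that the cells you want refreshed are selected; the macro name is made up.

```vba
Sub RecalculateSelectionOnly()
    ' Assumes calculation is Manual and a range of formulas is selected.
    If TypeName(Selection) <> "Range" Then Exit Sub

    ' Option 1: ask Excel to calculate just the selected cells.
    Selection.Calculate

    ' Option 2: the Ctrl+H trick in code. Replacing "=" with "=" re-enters
    ' every formula in the selection, which forces those cells to recalculate.
    ' Selection.Replace What:="=", Replacement:="=", LookAt:=xlPart
End Sub
```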

I use templates with formulas in them that I add data to every month. Once I paste the next month’s data, I copy down the formulas, recalculate, and then paste the formulas as values, except for one line of formulas kept for next month. This way my spreadsheet of 200-300k and growing lines doesn’t have to recalculate all the rows every time.

I set the calculation option to Manual, make any changes in Excel and then hit F9 to calculate as and when required, or set it back to Automatic once I have finished with any large or slow spreadsheets. It saves me so much time and frustration. I would love to hear any other tips on speeding up spreadsheets.

Cut down on the use of Array formulas – particularly if they are nested in IF statements.

Speed tips for formulae

1 As you type a formula after the = sign, when the prompt appears, press the down arrow key to select the function and press the Tab key so that the function is inserted. Then press fx in the formula bar to bring up the prompts and start filling in the blanks.

2 Use the F4 key for referencing.

3 When using the REPT formula, use “l” (a lowercase L) and then type the number of times you want to repeat it.

4 You can combine 2 REPT commands by shrinking the column width rather than writing long formulae.

5 The REPT formula is a powerful tool and can be used to show both negative and positive values. For example, a profit and loss account can be shown with REPT formulas.

Another use of the REPT formula is to show a confidence interval with the mean in the middle.

6 To make VLOOKUP work when the value you are looking up sits in the right-hand column: copy and paste the columns next to each other and perform the VLOOKUP. It is easy and there is no need for another formula. For example, take Name and Phone number in two columns.

VLOOKUP will look up the name and return the phone number. If we have the phone number and want the name, we would need to write MATCH and INDEX. Instead, if you copy the name column to the right of the phone column, VLOOKUP will return the name for the phone number. That is easy.

I am feeling sleepy after this. More later

Nice subject!

My 2 cts.

A. Formulas

1. If you need to turn off recalc, it is time for a redesign.

2. Avoid array formulas (this includes sumproduct), instead use helper columns which have intermediate results. Easier to debug and very often much faster

3. Avoid VLOOKUP, especially on large tables, instead, use INDEX combined with MATCH, where you use a helper column for the match so you only ask Excel to search your table once for each row instead of once for each column in a row.

4. Do your summarizing with Pivot tables instead of functions

5. Be prudent with range names. Use them sparingly and limit them to constants. Formulas with range names are harder to audit because of the extra layer between your formula and the grid.

6. Visit www.decisionmodels.com, the site contains a wealth of information on recalculation in Excel.
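Tip 3 in this list (INDEX with MATCH and a helper column) could be set up along these lines. The sheet names "Report" and "Data" and all the ranges are assumptions for the sake of the example.

```vba
Sub BuildIndexMatchWithHelper()
    ' Assumed layout (hypothetical): keys to look up in Report!A2:A500,
    ' lookup table in Data!A2:D1000 with its key in column A.
    With Worksheets("Report")
        ' Helper column: search the table once per row.
        .Range("B2:B500").Formula = "=MATCH($A2,Data!$A$2:$A$1000,0)"

        ' Each result column reuses the row position found by the helper,
        ' so no further searching is needed.
        .Range("C2:C500").Formula = "=INDEX(Data!$B$2:$B$1000,$B2)"
        .Range("D2:D500").Formula = "=INDEX(Data!$C$2:$C$1000,$B2)"
        .Range("E2:E500").Formula = "=INDEX(Data!$D$2:$D$1000,$B2)"
    End With
End Sub
```

With three VLOOKUP columns the table would be searched three times per row; here it is searched once and the INDEX calls are simple position lookups.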

I work with files that use a lot of data tables. In order to avoid excessive delays I will turn off the automatic setting under calculation options and select automatic except for data tables. In addition, I have noticed that excessive conditional formatting can really bog down the spreadsheet as well. Thus, I try to limit and consolidate formatting needs where I can.

Use as many array formulas as possible on the staging worksheet. That way the Excel or UDF functions are called as few times as possible.

The way I speed up my workbooks is by pasting values (instead of keeping the formulas) once the data is no longer going to be updated. For example, I have files that track activity that has happened each quarter. The sheets often have 35,000 rows of data and formulas in each of the 10 columns (for each row). As soon as the quarter is over, I paste the values over the formulas since things won’t be changing any longer.

Perform paste-down macros for all calculations. These use dynamic named ranges to select a row of formulas, then paste them in against a table of data. This way you can calculate formulas against thousands of rows and then copy and paste special as values. Removing live formulas seriously reduces calculation times for workbooks with 1K+ rows of data.

Perform Sorts and then use range formula (OFFSET, INDEX) to select a subset of rows, rather than using conditional formula on whole columns. SUMIF, COUNTIF, array formulas etc are very slow on big columns of data. Sorting can filter a table to records that share the same attribute and range formulas can pick up row numbers to only select a sorted block of values.

Keep use of array formulas to a minimum. Keep calculations running sequentially from top left to bottom right when possible. Break up larger internal formula calculations into smaller bites (more columns etc). Look for formula parts shared by formulas. Use offset to keep lookup formulae to the minimum required ranges. Use built-in formulas whenever possible.

I work with large workbooks with extensive formula throughout. I used to use VBA to paste in formulas then I would value them out, but my clients couldn’t easily modify the formulas if they desired a change. Since then I would place a formula row at the top of the data and use VBA to copy that row and paste formulas below, calculate then value them out. The client can then modify the initial row of data to suit their needs. This greatly improved save and load times.

When I have thousands of rows of equations (all the same), I convert all but the top row to values. Then I create a macro that spreads the equations from the top row down to all the necessary rows and makes them values again. Saves a lot of excel recalculating.
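A macro of this kind might look roughly like the sketch below. It assumes the data is on the active sheet, that column A is filled for every data row, and that the live formulas are kept in C2:F2; adjust all of these to your own layout.

```vba
Sub FillFormulasThenConvertToValues()
    ' Assumptions (hypothetical layout): data on the active sheet, column A
    ' always filled, and the "master" formulas kept live in C2:F2.
    Dim lastRow As Long
    Dim target As Range

    lastRow = Cells(Rows.Count, "A").End(xlUp).Row
    If lastRow < 3 Then Exit Sub          ' nothing below the formula row

    Set target = Range("C3:F" & lastRow)

    ' Spread the top-row formulas down, calculate, then freeze the results.
    Range("C2:F2").Copy Destination:=target
    target.Calculate
    target.Value = target.Value           ' formulas become plain values
End Sub
```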

Use IFERROR instead of IF(ISERROR(…

I have Excel 2003 files of 45 Mb plus that track daily shift performance that have lots of vlookups, conditional formats, data validation, event triggered VBA. To speed things up I cheat! The historic data is copy-special pasted over itself to turn it into values only – so when auto updates happen they only process the “current data”.

One thing I do where there are multiple columns with formulas is this:

Once my formulas have all calculated and I know the results won’t change, I copy the formulas and put them at the top of my spreadsheet. Then I put a red top & bottom border around the formulas so I can easily find them.

I then copy the data set full of formulas and re-paste it on itself (keyboard shortcut – copy/file/paste special/values). The spreadsheet calculates much faster.

When I need to update the data I just copy the row of formulas and paste them over the data rows.

Save as .xlsb to speed up opening time and decrease file size. I also changed =IF(ISERROR(…)) to =IFERROR(…) to speed up processing, and changed from VLOOKUPs to a pivot table with =GETPIVOTDATA to speed up processing.

a) Delete/or clear contents on all blank cells under & to the right of my data. b) On old inherited files, clear out old range names. c) use specific cell references for vlookups (rather than entire columns) d) remove as many calc’s as possible (copy-paste-special values) e) keep pivot tables in separate file from data file f)Stopped using arrays & sumproduct() completely 🙁 g) now considering upgrading to 64 bit OS & 64 bit MSOffice 2010 (currently using 32 bit MSExcel 2010 on XP)

1. Define names for ranges and use them in formulas if the data is flowing in from a database.

2. Remove any unused names, names that result in an error, and names whose scope is outside the workbook (Formulas > Name Manager).

Reduce images and shapes, which reduce performance.

I link all my dashboards with pivot tables and queries so that complex data can be updated with one click.

My array formulas used to reference an entire row or column (e.g. A:A or 1:1), and I’m pretty sure that slowed down the sheet. I shrunk the reference to go through, say, row 5000, and it appears to have helped the problem.

No doubt Excel is a powerful analytical tool, but most people do not plan before designing their spreadsheet. One should plan with the start and end in mind, and the assumption that the spreadsheet will never be used again should be kept out of mind. Perhaps this is the number one rule. Spreadsheets are about giving correct information to the user, not possibly erroneous information that looks good.

Excel Best Practices & Design

Formatting

Your spreadsheet should be easy to read and follow. Most users spend about 30%, or more, of their time formatting their spreadsheets. Use the cell format of Text only if really necessary. Any cell containing a formula that references a Text formatted cell will also become formatted as Text. This format is not usually needed but is very much used. If you apply a number format to specific cells, avoid applying the format to the entire column. If you do, Excel will assume you are using these cells.

Layout

Try to ensure all related raw data is on one worksheet and in one workbook. When putting in headings, bold the font. This will help Excel recognize them as headings when you use one of its functions. When putting data into the data area of your spreadsheet, try to avoid blank rows and columns. This is because a lot of Excel’s built-in features will assume a blank row or column is the end of your data. Use real dates for headings and format them appropriately. If you want the names of the months as headings, type them in as 1/1/2001, 1/2/2001, 1/3/2001 etc. and then format them as “mmmm”. This is a very simple procedure that is all too often overlooked. Don’t put in one cell what could go in more than one cell. For example, if you have the names of 100 people to put into your spreadsheet, don’t put each full name in one cell. Instead, put the first name in one cell and the surname in the next cell to the right.

Formulas

This is the biggest part of any spreadsheet! Without them you really only have a document. Excel has over 300 built in Functions (with all add-ins installed), but chances are you will only use a handful of these.

The usual practice with formulae in Excel is to reference entire columns, and this is a big mistake! It forces Excel to look through potentially millions of cells which it need not be concerned with at all. One of the very best ways to overcome this is to familiarize yourself with the use of dynamic named ranges.
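As a rough illustration, a dynamic named range can be created from VBA as shown here (you can equally type the same formula into Name Manager). The sheet name "Data", the name "SalesData" and the single header row are assumptions, and the formula only works if column A has no blank cells inside the data.

```vba
Sub AddDynamicNamedRange()
    ' Hypothetical names: sheet "Data", values in column A below a header in A1.
    ' COUNTA counts the header plus the data rows, so INDEX lands on the last
    ' filled row. INDEX is used rather than OFFSET, which is a volatile function.
    ThisWorkbook.Names.Add _
        Name:="SalesData", _
        RefersTo:="=Data!$A$2:INDEX(Data!$A:$A,COUNTA(Data!$A:$A))"
End Sub
```

A formula such as =SUM(SalesData) then covers only the rows that actually hold data, and grows as rows are added.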

Speeding up Re-calculations

A common problem with poorly designed spreadsheets is that they become painfully slow in recalculating. Some people will suggest that a solution to this problem is switching calculation to Manual via Tools>Options>Calculation. But a spreadsheet is all about formulas and calculations and the results that they produce. If you are running a spreadsheet in manual calculation mode, sooner or later you will read some information off your spreadsheet that has not been updated. Manual mode means pressing F9 at regular intervals, and because pressing F9 can be overlooked, it can lead to bad results.

Arrays, Sumproduct (used for multiple condition, summing or counting), UDFs, Volatile Functions and Lookup functions, can slow down the recalculations of spreadsheet.

Array Formulas

The biggest problem with array formulas is that they look efficient. An array formula must loop through each and every cell it references (one at a time) and check it against a criterion. Array formulas are best suited to being used in single cells or referencing only small ranges. A possible alternative is the Database functions. Another very good alternative, which is mostly overlooked, is the pivot table. Pivot tables can be frightening at first sight, but they are the most powerful feature of Excel.

UDF (User Defined Functions)

These are written in VBA and can be used the same way as built-in functions. Unfortunately, no matter how well a UDF is written, it will not perform at the same speed as one of Excel’s built-in functions, even if it would be necessary to use several nested functions to get the same result. UDFs should only be used if an Excel function is not available.

Volatile Functions.

Volatile functions are simply functions that recalculate each time a change of data occurs in any cell on any worksheet. Most functions, which are non-volatile, only recalculate if a cell that they reference has changed. Some of the volatile functions are NOW(), TODAY(), RAND(), OFFSET(), INDIRECT() and, depending on their arguments, CELL() and INFO(). ROWS() and COLUMNS() are sometimes listed as volatile but are not in current versions of Excel. If you are using the result of these functions frequently throughout your spreadsheet, avoid nesting them within other functions to get the desired result, especially in array formulas and UDFs. Simply put the volatile function into a single cell on your spreadsheet and reference that cell from within other functions.

Lookup Functions

The famous VLOOKUP(). Excel is very rich in lookup functions. These functions can be used to extract data from just about any table of data. The biggest mistake made by most is forcing Excel to look in thousands, if not millions, of cells superfluously. The other mistake is that the lookup functions are told to find an exact match. This means that Excel will need to check all cells until it finds an exact match. If possible, use TRUE for the range_lookup argument of VLOOKUP and HLOOKUP, which requires the lookup column (or row) to be sorted in ascending order. Be aware that an approximate match returns the nearest lower value when the key is missing. So, whenever possible, sort your data appropriately. Sorting the lookup columns is the single best way to speed up lookup functions. Another bad practice is the double use of the lookup function nested within one of Excel’s Information functions, like =IF(ISNA(VLOOKUP(cell ref,Range,2,FALSE))=TRUE,"Please check",VLOOKUP(cell ref,Range,2,FALSE))

This is used to prevent the #N/A error from displaying when no match can be found. This forces Excel to use the VLOOKUP twice. As you can imagine, this doubles the number of Lookup functions used. The best approach is to live with the #N/A, or hide it via CONDITIONAL FORMATTING.
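If you are on Excel 2007 or later, another way to avoid the doubled lookup is IFERROR, which evaluates the VLOOKUP only once. A small sketch follows, with made-up ranges; note that IFERROR catches every error type, not just #N/A.

```vba
Sub UseSingleLookupWithIfError()
    ' Hypothetical ranges: keys in A2:A500, lookup table in $F$2:$G$1000.
    ' IFERROR runs the VLOOKUP once and returns the fallback text
    ' whenever the lookup yields an error.
    Range("B2:B500").Formula = _
        "=IFERROR(VLOOKUP($A2,$F$2:$G$1000,2,FALSE),""Please check"")"
End Sub
```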

LAST WORDS

Learn to use database functions. They are very easy to use and are often much faster than their Lookup & Reference counterparts.

Microsoft Tips

Organize your worksheets vertically. Use only one or two screens of columns, but as many rows as possible. A strict vertical scheme promotes a clearer flow of calculation.

When possible, a formula should refer only to the cells above it. As a result, your calculations should proceed strictly downward, from raw data at the top to final calculations at the bottom.

If your formulas require a large amount of raw data, you might want to move the data to a separate worksheet and link the data to the sheet containing the formulas.

Formulas should be as simple as possible to prevent any unnecessary calculations. If you use constants in a formula, calculate the constants before entering them into the formula, rather than having Microsoft Excel calculate them during each recalculation cycle.

Reduce, or eliminate, the use of data tables in your spreadsheet or set data table calculation to manual.

If you only need a few cells to be recalculated, select those cells and replace their equal signs (=) with equal signs (=), which re-enters and recalculates just those formulas. This is only an improvement if you are calculating a very small percentage of the formulas on your worksheet.

By changing formulas to manual from automatic

If my model has a lot of formulas in the data sheets and I am working on the summary tab, then I keep my formula calculation option as “Manual”.

If you are doing calculations in one sheet, please use Shift+F9 (to recalculate the formulas in the active sheet).

F9 – to recalculate all open workbooks.
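For macro users, the VBA counterparts of these shortcuts are sketched below. The macro name is made up, and it assumes calculation is already set to Manual.

```vba
Sub RecalculateOnDemand()
    ' Assumes calculation has been set to Manual.
    ActiveSheet.Calculate                 ' like Shift+F9: active sheet only
    Application.Calculate                 ' like F9: all open workbooks
    ' Selection.Calculate                 ' only the currently selected cells
End Sub
```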

1. arrange source data before linking to dashboard / report with macros and other aggregate functions

2. separate results into several charts & link list boxes to just one calculation

3. avoid volatile and array functions

VBA / Macros Optimization Tips

First. I find your site awesome.

Well, I speed up my VBA code by setting

Application.ScreenUpdating to False

Application.EnableEvents to False and

Application.Calculation to xlCalculationManual

and then setting those values back to whatever they were before I made the changes. I set EnableEvents to False when the workbook uses event-driven actions and I know that I do not need them during those calculations/operations.
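One way to set the values back to whatever they were is to store them in variables first. This is only a sketch; the macro name and the placeholder for the slow work are mine.

```vba
Sub RunWithFastSettings()
    Dim prevScreenUpdating As Boolean
    Dim prevEnableEvents As Boolean
    Dim prevCalculation As XlCalculation

    ' Remember whatever the user had before we touch anything.
    With Application
        prevScreenUpdating = .ScreenUpdating
        prevEnableEvents = .EnableEvents
        prevCalculation = .Calculation

        .ScreenUpdating = False
        .EnableEvents = False
        .Calculation = xlCalculationManual
    End With

    On Error GoTo Restore
    ' ... the slow work goes here ...

Restore:
    ' Reached on success and on error, so nothing is left switched off.
    With Application
        .Calculation = prevCalculation
        .EnableEvents = prevEnableEvents
        .ScreenUpdating = prevScreenUpdating
    End With
End Sub
```

Restoring the saved values, rather than hard-coding Automatic, respects users who deliberately work in Manual mode.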

Two approaches.

a) Profile both worksheet calculations, and if necessary VBA code using the profilers downloadable here to identify and report on slower performing calculations and code.

http://ramblings.mcpher.com/Home/excelquirks/optimizationlink

b) in VBA, always abstract data from the worksheet and work on the abstracted object model.

http://ramblings.mcpher.com/Home/excelquirks/classeslink/data-manipulation-classes/howtocdataset

We design macros which we run across the many worksheets. If formulas are generated, we run final macros to save the formula results as numbers. This keeps our worksheets light.

Regards

David

1. Disable screen updating in VBA.

2. Set calculation to Manual and use Shift-F9 to calculate each sheet as needed. It is a pain, but I have found it is a major time saver on a couple of my largest files.

Recently, I’ve been busy with a project to emulate software for seeking secret messages in classical texts using Excel. I need to write hundreds of thousands of single letters, each in its own cell, and I’ve found it faster to do the work internally in VBA and finally write the result as a block to a declared range, rather than writing each cell individually in a loop.

I haven’t measured times, but I would venture it’s a lot faster.
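The block-write approach could look something like this sketch. The grid size and the letters written are invented for the example; the point is that the sheet is touched once.

```vba
Sub WriteLettersAsBlock()
    ' Hypothetical example: fill 1,000 rows x 26 columns with single letters.
    Const ROW_COUNT As Long = 1000
    Const COL_COUNT As Long = 26
    Dim letters() As Variant
    Dim r As Long, c As Long

    ' Build everything in memory first.
    ReDim letters(1 To ROW_COUNT, 1 To COL_COUNT)
    For r = 1 To ROW_COUNT
        For c = 1 To COL_COUNT
            letters(r, c) = Chr$(64 + c)      ' "A" to "Z"
        Next c
    Next r

    ' One write to the sheet instead of 26,000 separate cell writes.
    Range("A1").Resize(ROW_COUNT, COL_COUNT).Value = letters
End Sub
```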

Not sure if this is what you are looking for – but here is what I do to speed up my excel workbook –

Along with all standard keyboard shortcuts – I have been creating a lot of Macros. I ran out of shortcut keys I can use with Ctrl – so now started using Ctrl+Shift to create my own shortcuts. (May be I don’t know any existing shortcut- and tried to reinvent the wheel for some of them)

I have Macros for – Green/Yellow/Pink Highlight – Merge + Wrap Text – Enter TB Link (Entering specific formula to cell) – Single Underline Cell

Just thought to share this as you asked for – considering all the entries I have seen from others on your website, I am just a newbie in the Excel World.

VBA: One powerful one is to use “Destination:” in your copying and pasting which bypasses the clipboard. Or if only values are wanted simply assign values.

So instead of:

Sheet1.Range("A1:A100").Copy

Sheet2.Range("B1").PasteSpecial

Application.CutCopyMode = False

'Use:

Sheet1.Range("A1:A100").Copy Destination:=Sheet2.Range("B1")

If only values are required, ditch the copy and simply assign values from one place to another (the two ranges must be the same size):

Sheet2.Range("B1:B100").Value = Sheet1.Range("A1:A100").Value

Avoid loops like the plague while writing macros, unless absolutely necessary.

A tip which is well documented when searching for ways to improve performance when using VBA/Macros is to turn off screen updating, calculations and setting PivotTables to manual update.

Most of the procedures I create in VBA start with:

With Application

.ScreenUpdating = False

.Calculation = xlCalculationManual

End With

And will end with the following statements:

With Application

.ScreenUpdating = True

.Calculation = xlCalculationAutomatic

End With

If PivotTables are involved then I include the following in procedure:

With PivotTable

.ManualUpdate = True

End With

And will end with the following statement

With PivotTable

.ManualUpdate = False

End With
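If a sheet holds several pivot tables, the same switch can be applied to each of them in a loop. A minimal sketch, assuming the pivot tables live on the active sheet:

```vba
Sub UpdatePivotTablesQuietly()
    ' Assumes the active sheet holds zero or more PivotTables.
    Dim pt As PivotTable

    For Each pt In ActiveSheet.PivotTables
        pt.ManualUpdate = True            ' defer redraws while we make changes
    Next pt

    ' ... change fields, filters or layout here ...

    For Each pt In ActiveSheet.PivotTables
        pt.ManualUpdate = False           ' let each table redraw once
    Next pt
End Sub
```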

Planning carefully before coding.

Passing the entire SQL query into the code, leaving no connection on sheets.

This is a very general tip, but when using VBA — AVOID LOOPS!

Use the “Find” and “Search” methods rather than looping through cells. Loops work quickly when you are using fewer than 100, or sometimes fewer than 1000 cells, but start adding more and you will be in for a waiting game.
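As an illustration, here is a small wrapper around Range.Find that replaces a cell-by-cell loop. The function name is hypothetical, and it is meant for a single contiguous range.

```vba
Function FindFirstMatch(ByVal searchIn As Range, ByVal lookFor As String) As Range
    ' Returns the first cell whose whole value equals lookFor, or Nothing.
    ' Find remembers its settings between calls, so they are spelled out here.
    ' Starting "after" the last cell makes the search begin at the first cell.
    Set FindFirstMatch = searchIn.Find( _
        What:=lookFor, _
        After:=searchIn.Cells(searchIn.Cells.Count), _
        LookIn:=xlValues, _
        LookAt:=xlWhole, _
        SearchOrder:=xlByRows, _
        SearchDirection:=xlNext, _
        MatchCase:=False)
End Function
```

Always check the result with `Is Nothing` before using it, because Find returns Nothing when there is no match.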

If you have VBA code that writes updates to the screen, this slows down the code (I/O is slow). If you have a lot of screen writes, the code can be painfully slow. You can turn off screen writes while your code is running and then do one massive screen write at the end of the macro. Up at the beginning of your code, maybe just after you declare variables, add the line “Application.ScreenUpdating = False”. At the end of your code, you need to turn screen writes back on so add the line “Application.ScreenUpdating = True” just before you exit the macro.

If you have a load of screen writes, the speed difference can be dramatic.

Hey Chandoo

VBA-speed

at the beginning of the macro

…

Application.ScreenUpdating = False

Application.Calculation = xlCalculationManual

at the end of the macro

….

Application.ScreenUpdating = True

Application.Calculation = xlCalculationAutomatic

Call Calculate

end sub

regards Stef@n

With Application.ScreenUpdating = False / True

With Application.Calculation = xlCalculationManual

Use the With statement wherever possible,

and release memory when object variables are not used anymore.

Everything Else

1. I list out the things required and plan my task in my head.

2. I try to minimize the calculations so that they run quickly. So, I am trying to learn new formulas.

3. At each and every step, I think about the others who will use that Excel file, so that I can make the workbook user-friendly for them too.

I close MS Outlook when working on heavy files. Basically I exit all the programs that will eat into process speed. It helps to an extent.

Also, I try and minimize cross linking of files.

Power Pivot from Microsoft. This looks like it would solve the problem of large amounts of data.

http://office.microsoft.com/en-us/excel/powerpivot-for-microsoft-excel-2010-FX101961857.aspx

I wrote a blog article on my favorite tips here: http://www.plumsolutions.com.au/articles/excel-model-file-size-getting-out-hand

I haven’t done this myself, but a consultant we used sped up our dashboard by writing VBA code which “dumped” a lot of the back data after it was loaded. This greatly reduced the amount of data stored, which reduced the file size and in turn sped up the dashboard.

1. Many database downloads, from whatever system, may include blank data occupying cells from the last row of data to the last possible row Excel can provide. So I look at the data set and delete those rows (or columns, but I see more blank rows than blank columns).

2. Too many pivot tables: I’d ask the person who created multiple pivot tables in the same workbook whether there is a need to maintain those pivot tables. If the answer is

a) no need to maintain: I’d delete.

b) need to maintain, but not necessarily as a pivot table: I’d convert the pivot tables into plain text/data tables (thereby removing the pivot function).

3. Try to reduce the number of worksheets. I found that the size of a workbook expands if there are more worksheets (I can’t prove it, but it’s my general observation).

Standardize, standardize, standardize! The more “boring” you are, the quicker it will be to set up, work with, and have others use your formats!

Items to standardize:

– Font

– Color scheme

– Headers

– Colors for “input” (font color or fill color)

– Tab color scheme (answers, data input, analytics)

– Color for “answer/solution”

– Lead with a recap page (easy and quick to find the solution)

– Configure to print (courtesy to others – if you need to print an answer – set up the parameters before sharing)

By using a single sheet in the workbook & using Alt and Ctrl shortcut keys.

Oddly enough, the best thing I have found to speed up Excel is to completely disconnect internet access for my computer. I don’t know why, but Excel is unbelievably slow when I am otherwise online, and speeds up immediately when I disconnect. I’d love it if someone could help me understand why this is the case.

Even though I’ve been using Excel for quite some time, I learn and love your site. You teach me the impossible. The simplest way I at least save data space is to save it in .xlsb format. I read somewhere that even a .xlsx is basically a number of zipped or compressed files that need to open and save. Not sure about that, but know the binary file is much smaller in size than the others. Not sure if macro enabled workbooks will save as binary. Thanks. Always look forward to what the next email will hold…scary sometimes. -Jim
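For what it is worth, a binary workbook (.xlsb) can contain macros. Saving in that format from VBA might look like this sketch; the macro name is made up, and it saves the active workbook in its current folder under the same base name.

```vba
Sub SaveActiveWorkbookAsBinary()
    ' Hypothetical helper: re-saves the active workbook as .xlsb in the same
    ' folder. xlExcel12 is the Excel Binary Workbook format.
    Dim baseName As String
    Dim dotPos As Long

    If ActiveWorkbook.Path = "" Then Exit Sub     ' never saved: no folder yet

    baseName = ActiveWorkbook.Name
    dotPos = InStrRev(baseName, ".")
    If dotPos > 0 Then baseName = Left$(baseName, dotPos - 1)

    ActiveWorkbook.SaveAs _
        Filename:=ActiveWorkbook.Path & Application.PathSeparator & baseName & ".xlsb", _
        FileFormat:=xlExcel12
End Sub
```

After SaveAs the open workbook is the new .xlsb file; the original file stays on disk untouched.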

I’m not an expert, but I try to keep the dashboard as simple as possible.

I use Access to hold the main table, and from that table we create different dashboards, reports and pivots to analyze data.

I break any links to the spreadsheet that I am not using.
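Links can also be broken from VBA. The sketch below breaks every Excel link in the active workbook, which is blunter than picking out only the unused ones, so treat it as a starting point and try it on a copy first.

```vba
Sub BreakAllWorkbookLinks()
    ' Replaces every formula that points at another workbook with its
    ' current value. This cannot be undone, so work on a copy first.
    Dim links As Variant
    Dim i As Long

    links = ActiveWorkbook.LinkSources(Type:=xlLinkTypeExcelLinks)
    If IsEmpty(links) Then Exit Sub       ' no external links found

    For i = LBound(links) To UBound(links)
        ActiveWorkbook.BreakLink Name:=links(i), Type:=xlLinkTypeExcelLinks
    Next i
End Sub
```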

Try to avoid adding formatting over an area larger than you need, I’ve found that if you format a whole row, column or worksheet it can slow the workbook down and create large files

Dumping out as much unnecessary data as I possibly can, converting formulas to values whenever possible and making sure the empty space on each sheet is empty.

Also I’m using lots of pivot tables on my spreadsheets so I’m trying to use as few pivot caches as possible and trying to use external data sources for my PTs whenever possible (or deleting the original data once the PT is created).

Most of the time, to increase speed & reduce size, what I do is:

1) Simply copy the used data cells to a new sheet (by selecting from A1 to the last data cell).

2) If there are too many decorative borders around the cells, try replacing them with minimal dotted lines (just to highlight the difference).

3) Avoid using too many fonts in the sheet.

4) Cut short complicated formulas or multiple linked formulas.

I have link-based models. I try to keep my links as short as possible. I try to put all the sheets in one file and interlink them, so that they take less storage space and respond much faster.

Create a view in SQL and set a scheduled task to run to generate the view before you update the dashboard.

Do we get some SWAG for sharing??

Here are some tips I’ve collected, although they repeat some points they provide different viewpoints:

10 WAYS TO IMPROVE EXCEL PERFORMANCE

http://www.techrepublic.com/blog/10things/10-ways-to-improve-excel-performance/2842?tag=nl.e072

EXCEL 2010 PERFORMANCE: IMPROVING CALCULATION PERFORMANCE

http://msdn.microsoft.com/library/ff700515.aspx

CLEAN UP YOUR MACRO LIST

http://excelribbon.tips.net/T008037_Clean_Up_Your_Macro_List.html

OPTIMIZE MACROS – VBA CODE CLEANER

http://www.appspro.com/Utilities/CodeCleaner.htm

I recently happened to work on a report which has 1.5 lakh (150,000) rows of data in 16 columns. The requirement was that, as soon as a change is made in the main report, say for a department or month, the numbers should change accordingly. I tried most known formulas like SUMIFS, SUMPRODUCT, VLOOKUP, INDEX and MATCH. However, the calculation time these formulae took was much longer than for the one formula that I felt was the fastest: GETPIVOTDATA.

I basically used the “Show Report Filter Pages” option in the Pivot options to generate summary data in around 500 tabs from my base data. Then I used GETPIVOTDATA formulas in my report file. Though the file size was a bit huge, my formulas still calculated almost instantaneously.

One other strange thing I noticed in another file of mine was that when I pressed Ctrl+End, the last cell it stopped at was somewhere around row 2 lakh (200,000), although the data was only in some 10k rows. I used the Clear All option on everything from the last cell where I had data to the cell Ctrl+End went to. By doing this my file size came down from 12 MB to some 600 KB.. 🙂

Hope this helps someone.
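The Ctrl+End clean-up described above can be automated. This sketch deletes the surplus rows rather than clearing them, which is my own variation, and it assumes column A is filled for every real data row on the active sheet.

```vba
Sub ClearBelowLastDataRow()
    ' Assumption: column A is filled for every real data row on the active sheet.
    Dim lastDataRow As Long
    Dim lastUsedRow As Long

    lastDataRow = Cells(Rows.Count, "A").End(xlUp).Row
    With ActiveSheet.UsedRange
        lastUsedRow = .Row + .Rows.Count - 1
    End With

    If lastUsedRow > lastDataRow Then
        ' Delete (not just clear) the surplus rows so stray formats go too.
        Rows(lastDataRow + 1 & ":" & lastUsedRow).Delete
    End If
    ' Save the workbook afterwards so Excel can reset the Ctrl+End position.
End Sub
```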

Hi,

Great topic (as usual).

One thing I like to do is minimize links between workbooks. Instead of using live links to import data I like to use import and export sheets. These are identical sheets on the origin workbook (for export) and the receiving workbook (for import). Values are calculated in the origin workbook and pasted to the receiver as values only.

This gets rid of messy links and keeps spreadsheets smaller and tidier.

One thing to be careful of: if one sheet's layout changes, the other has to change too, so that they stay identical.
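The export/import pattern can be automated so that only values cross between workbooks. This is a rough sketch, not the contributor's own macro: the file path and the sheet names "Export" and "Import" are placeholders, and it assumes the macro lives in the receiving workbook.

```vba
' Hypothetical macro: copies values only from the origin workbook's
' export sheet into the identical import sheet of this workbook.
Sub ImportValuesOnly()
    Dim src As Workbook
    Dim exportRng As Range

    Application.ScreenUpdating = False

    ' Placeholder path: point this at the real origin workbook.
    Set src = Workbooks.Open(Filename:="C:\Reports\Origin.xlsx", ReadOnly:=True)
    Set exportRng = src.Worksheets("Export").UsedRange

    With ThisWorkbook.Worksheets("Import")
        .Cells.ClearContents
        ' Writing to the same address keeps the two sheets identical in layout.
        ' Assigning .Value transfers results only, so no links are created.
        .Range(exportRng.Address).Value = exportRng.Value
    End With

    src.Close SaveChanges:=False
    Application.ScreenUpdating = True
End Sub
```

Because the values are assigned directly rather than pasted, the clipboard is not used and no external references end up in the receiving workbook.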

Thank you again for your excellent material.

That’s my problem too, and I would love to hear what others say. For me, closing other spreadsheets and unnecessary tasks running on the PC helps.

– remove external data links – better to import a large data table – or use an SQL statement if possible.

– especially don’t use INDIRECT to anything external

1) Limit color use in Excel

2) Hide gridlines (with the Gridlines option on the View tab) rather than coloring the cells white

3) Create smart Vlookup formulas (Arrange data in the lookup tab so the range is as small as possible – 3 columns vs. 20 columns)

4) Link multiple tabs that use the same data (dates, headers, etc.) to one data tab. The links eliminate having to update each tab.

5) Extract only the needed data from the database (10 columns of needed data vs. 40 columns of available data).

6) If the database report does not allow the user to choose what is exported, export the data, organize the needed data into a consolidated area (rows x columns), copy & paste it into a new tab and delete the original tab. Many people leave the original data in the file, which slows down the file and adds to the file size.

1) Don’t create separate pivots from scratch on the same data. Copy the existing pivot and rearrange the copy, so that both share one pivot cache.

2) Go To Special –> Last cell: check the last used cell and delete unnecessary data and formulae beyond your real data.

3) Set Calculation option to manual. Do all the dirty work and finally make it automatic and go for a coffee break 🙂

4) Use Excel tables as far as possible.

To speed up workbooks, the reduction of formulas within the workbooks should be the main aim.

If the data is being pulled from an external database into the worksheet:

– Do not perform calculations in the workbook that could have been done at the source, e.g. using formulas to split up dates like mm-dd-yyyy, derive weekdays and so on.

– Reduce the number of sources if possible and combine the data in one sheet

– Use named ranges

– Split up the workbooks for different purposes (Dashboard for CEO, Dashboard for CFO and so on).

– Try to use only one format for importing (I prefer *.csv or *.txt)

– If you connect your Workbook directly to a SQL database, make sure the connection is high-speed

If the modeling is too complex, think about putting a (semi-)professional ETL tool in between, or use open-source add-ons like PALO or Pentaho to bring the power of multidimensional databases to your BI tools.

This can and will save time in calculating for the necessary functions of the workbooks. Stay with KISS (Keep it simple, stupid).

I use a named range, built with the OFFSET function, as the source for multiple pivots. This not only speeds up my calculation but also reduces the size of the workbook.
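A dynamic named range of this kind might be defined as follows. This is only a sketch under assumptions: the sheet name "Data" and the range name "PivotSource" are invented for illustration, and the formula expects headers in row 1 with no blank cells in column A or in the header row.

```vba
' Hypothetical macro: defines a named range that grows and shrinks with the data.
Sub AddDynamicPivotSource()
    ' Height = number of filled cells in column A,
    ' width  = number of filled cells in row 1.
    ThisWorkbook.Names.Add Name:="PivotSource", _
        RefersTo:="=OFFSET(Data!$A$1,0,0,COUNTA(Data!$A:$A),COUNTA(Data!$1:$1))"
End Sub
```

Each pivot can then use PivotSource as its data source, so all of them pick up new rows after a refresh. Note that OFFSET is a volatile function, so the name is re-evaluated on every recalculation.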

More on Excel Optimization & Speeding up:

Read these articles too,

- Optimization & Speeding-up Tips for Excel Formulas

- Charting & Formatting Tips to Optimize & Speed up Excel

- Optimization Tips & Techniques for Excel VBA & Macros

- Excel Optimization tips submitted by Experts

- Excel Optimization tips submitted by our readers

Want to become better in Excel? Join Chandoo.org courses

Excel School: Learn Excel from basics to advanced level. Create awesome reports, dashboards & workbooks.

VBA Classes: Learn VBA & Macros step-by-step. Build complex workbooks, automate boring tasks and do awesome stuff.

How do you speed-up Excel? Share your tips

Between these 75 ideas and previously written articles, we have covered a lot of optimization & speeding-up techniques. What are your favorite methods? How do you optimize & deal with sluggish workbooks? Please share your ideas & tips with us using comments.
