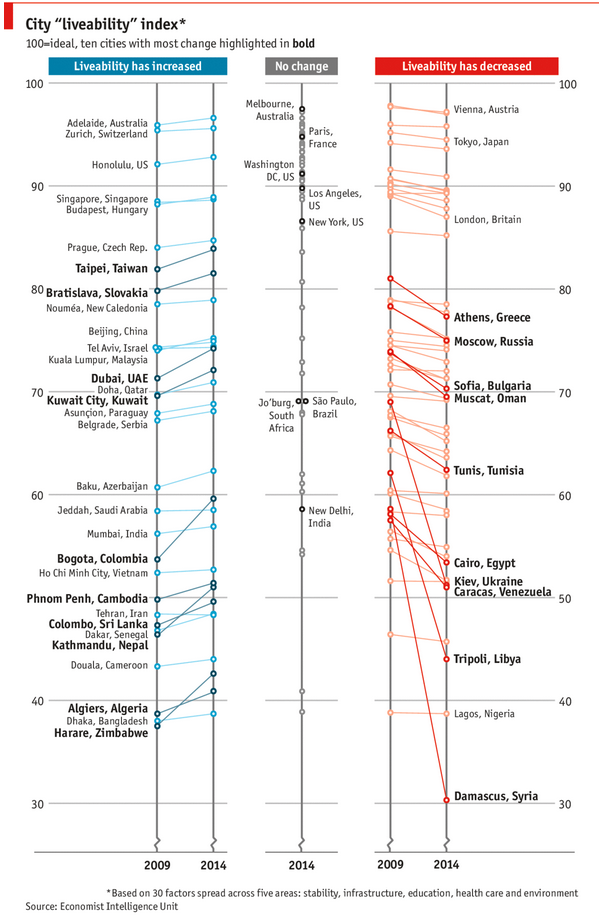

Recently, I saw this chart on The Economist's website.

It tries to show how various cities rank on a livability index and how they compare to the previous ranking (2014 vs. 2009).

As you can see, this chart is not the best way to visualize “Best places to live”.

A few reasons why:

- The segregated views (blue, gray & red) make it hard to look for a specific city or region.

- The zig-zag lines look good, but they are incredibly hard to understand.

- Labels are all over the place, which makes the data hard to interpret.

- Some points have no labels (or ambiguous labels), leading to further confusion.

After examining the chart long & hard, I got to thinking.

It's no fun criticizing someone's work. Creating a better chart from this data, now that's awesome.

So I went looking for the raw data behind this graphical mess. Turns out, The Economist sells this data for the meager price of US $5,625.

Alas, I was saving my left kidney for something more prominent than a bunch of raw data in a workbook. Maybe if they had sparklines in the file…

So armed with the certainty that my kidney will stay with me, I now turned my attention to a similar data set.

I downloaded my website's visitor data by city for the top 100 cities in September 2014 & September 2013 from Google Analytics.

And I could get it for exactly $0.00. Much better.

This data is similar in shape to The Economist's data: a ranking of cities at two points in time.

Chart visualizing top 100 cities

Here is a chart I prepared from this data.

This chart (well, a glorified table) not only lets you understand all the data, but also lets you focus on specific groups of cities (top % changes, new cities in the top 100, cities that dropped out etc.) with ease.

Download top 100 cities visualization – Excel workbook

Click here to download this workbook. Examine the formulas & formatting settings to understand how this is made.

How is this visualization made?

Here is a video explaining how the workbook is constructed. [see it on our YouTube channel]

The key techniques used in this workbook are,

- SUMIFS, INDEX + MATCH formulas for figuring out data

- Sorting data by a particular column

- Conditional formatting to show % change arrows

- Form controls for user interactivity
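The lookup step behind the first technique (finding each city's previous rank, which is the job INDEX + MATCH does on a worksheet) can be sketched in VBA. This is only a rough illustration under stated assumptions, not the workbook's actual logic: the city lists are made-up sample data, and `RankOf` and `ShowRankChanges` are hypothetical names.

```vba
Option Explicit

' Hypothetical helper: returns the 1-based position of city in rankedCities,
' or 0 when the city is not in the list (similar to MATCH failing to find it).
Private Function RankOf(ByVal city As String, ByVal rankedCities As Variant) As Long
    Dim i As Long
    For i = LBound(rankedCities) To UBound(rankedCities)
        ' vbTextCompare makes the lookup case insensitive
        If StrComp(rankedCities(i), city, vbTextCompare) = 0 Then
            RankOf = i - LBound(rankedCities) + 1
            Exit Function
        End If
    Next i
    RankOf = 0
End Function

Public Sub ShowRankChanges()
    ' Made-up sample data, ordered by rank (first item = rank 1)
    Dim cities2013 As Variant, cities2014 As Variant
    cities2013 = Array("London", "New York", "Mumbai", "Sydney")
    cities2014 = Array("New York", "London", "Sydney", "Bangalore")

    Dim i As Long, city As String
    Dim newRank As Long, oldRank As Long

    For i = LBound(cities2014) To UBound(cities2014)
        city = cities2014(i)
        newRank = i - LBound(cities2014) + 1
        oldRank = RankOf(city, cities2013)

        If oldRank = 0 Then
            Debug.Print city & ": new in the list at rank " & newRank
        Else
            ' Positive change = the city moved up the ranking
            Debug.Print city & ": " & oldRank & " -> " & newRank & _
                        " (change " & (oldRank - newRank) & ")"
        End If
    Next i
End Sub
```

Run `ShowRankChanges` and check the Immediate window. Cities missing from the older list are reported as new entries, which corresponds to the "new cities in the top 100" group in the chart.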

Since the process of creating this visualization is similar to some of the examples discussed earlier, I recommend you go through the posts below if you have difficulty understanding this workbook:

- Suicides vs. Murders – interactive Excel chart

- Gender Gap chart in Excel

- Visualizing world education rankings

- Analyzing survey results with panel charts

How would you visualize similar data?

Here is a fun thought experiment. How would you visualize such data? Please share your thoughts (or example workbooks) in the comments. The rest of our readers and I are eager to learn from you.

6 Responses to “Make VBA String Comparisons Case In-sensitive [Quick Tip]”

Another way to test if Target.Value equals a string constant without regard to letter casing is to use the StrComp function...

If StrComp("yes", Target.Value, vbTextCompare) = 0 Then

' Do something

End If

That's a cool way to compare. I just converted my values to strings and used the above code to compare. Worked nicely.

Thanks!

In case that option just needs to be used for a single comparison, you could use

If InStr(1, "yes", Target.Value, vbTextCompare) Then

'do something

End If

as well.
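One thing to keep in mind with the InStr variant above: InStr tests whether one string contains another, so `InStr(1, "yes", Target.Value, vbTextCompare)` is also non-zero when the value is only part of "yes", or empty. A small sketch comparing the two checks (the procedure name and sample inputs are made up for illustration):

```vba
Option Explicit

Public Sub CompareContainmentVsEquality()
    Dim candidate As Variant

    ' "YES" passes both checks; "y" and "" pass only the InStr check,
    ' because InStr looks for the candidate anywhere inside "yes".
    For Each candidate In Array("YES", "y", "")
        Debug.Print "[" & candidate & "]", _
            "InStr: " & CBool(InStr(1, "yes", candidate, vbTextCompare)), _
            "StrComp: " & (StrComp("yes", candidate, vbTextCompare) = 0)
    Next candidate
End Sub
```

If the goal is an exact, case-insensitive match, the StrComp form is the safer of the two.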

Nice tip, thanks! I never even thought to think there might be an easier way.

Regarding Chronology of VB in general, the Option Compare pragma appears at the very beginning of VB, way before classes and objects arrive (with VB6 - around 2000).

Today the StrComp() and InStr() functions offer a more local way to compare, which is more consistent with object programming (even if VB is still interpreted).

My only question here is : "what if you want to binary compare locally with re-entering functions or concurrency (with events) ?". This will lead to a real nightmare and probably a big nasty mess to debug.

By the way, congrats on your millions of visits per month 🙂

This is a nice article.

I used these examples to help my understanding. InStr is similar to Find, but it can be either case sensitive or case insensitive.

Hope the examples below help.

Public Sub CaseSensitive2()

If InStr(1, "Look in this string", "look", vbBinaryCompare) = 0 Then

MsgBox "woops, no match"

Else

MsgBox "at least one match"

End If

End Sub

Public Sub CaseSensitive()

If InStr("Look in this string", "look") = 0 Then

MsgBox "woops, no match"

Else

MsgBox "at least one match"

End If

End Sub

Public Sub NotCaseSensitive()

' If you do a lot of case-insensitive searching, you can put Option Compare Text at the top of the module instead

If InStr(1, "Look in this string", "look", vbTextCompare) = 0 Then

MsgBox "woops, no match"

Else

MsgBox "at least one match"

End If

End Sub