In How Many Links are Too Many Links, O’Reilly Radar shows us this unfortunate bubble chart (click on the image to see a bigger version).

I say unfortunate for lack of a better word that doesn't sound harsh.

Just in case you are wondering what that chart is trying to tell (which is perfectly fine):

Nick Bilton, who constructed this chart, got curious, went to the top 98 websites in the world, and counted how many links each has on its home page. Then he used charting tools like Processing to create the bathing bubbles you are seeing alongside.

The conclusion?

Too many bubbles can drown you. And also, top web sites have lots and lots of links on their home pages.

But seriously, apart from looking really pretty, does this chart actually provide that conclusion?

I think Nick and the O’Reilly Radar team could have done much better with a simpler, more fortunate chart selection.

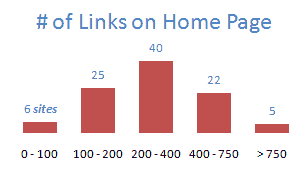

A histogram of # of links on popular home pages, like the one below, would have been very easy to read and would get the point across.

I showed some dummy data in the histogram, but when you create 2 histograms, one for popular sites (rank under 5000) and one for not-so-popular sites (rank over 5000), you can easily make the point and use the bubbles for a warm bath.
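The two-histogram idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the link counts are made-up dummy data (not Nick Bilton's figures), and the helper names `histogram` and `render` and the bin width of 50 are hypothetical choices.

```python
from collections import Counter

def histogram(link_counts, bin_width=50):
    """Count how many sites fall into each bin of `bin_width` links."""
    bins = Counter((n // bin_width) * bin_width for n in link_counts)
    return dict(sorted(bins.items()))

def render(title, bins, bin_width=50):
    """Draw a histogram as text, one '#' per site in the bin."""
    lines = [title]
    for start, count in bins.items():
        lines.append(f"{start:>4}-{start + bin_width - 1:<4} {'#' * count}")
    return "\n".join(lines)

# Dummy data (assumption): number of links on each home page.
popular = [120, 135, 160, 180, 210, 230, 260, 310]
less_popular = [20, 35, 40, 60, 75, 90, 110, 140]

print(render("Popular sites (rank under 5000)", histogram(popular)))
print(render("Not-so-popular sites (rank over 5000)", histogram(less_popular)))
```

Placing the two outputs one above the other, with the same bins, makes any shift between the groups visible at a glance, which is the comparison the bubbles hide.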

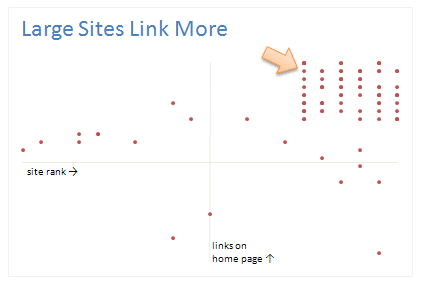

A better alternative is to show a scatter chart with site rank on one axis and # of links on home page on the other. That way, a conclusion like Top Sites Link More can be easily established.

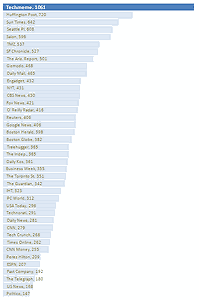

Even a bar chart with number of links on each home page could have been better than umpteen bubbles.

You could easily add a bar with “avg. number of links on non-popular sites” to contrast the linking behaviour of large sites with that of small sites.
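A rough sketch of that extra bar, in Python, might look like the following. Everything here is assumed for illustration: the site names and link counts are invented, and `bars_with_baseline` and `render_bars` are hypothetical helpers, not part of any charting tool.

```python
from statistics import mean

def bars_with_baseline(top_sites, other_link_counts,
                       label="avg. non-popular sites"):
    """Return (label, links) rows for a bar chart: one bar per top site,
    sorted from most to fewest links, plus one trailing bar holding the
    average link count of the non-popular sites."""
    rows = sorted(top_sites.items(), key=lambda kv: kv[1], reverse=True)
    rows.append((label, round(mean(other_link_counts))))
    return rows

def render_bars(rows, links_per_mark=10):
    """Draw the rows as a text bar chart, one '#' per `links_per_mark` links."""
    width = max(len(name) for name, _ in rows)
    return "\n".join(
        f"{name:<{width}} {'#' * (links // links_per_mark)} {links}"
        for name, links in rows
    )

# Dummy data (assumption): links on each home page.
top_sites = {"site-b": 210, "site-a": 320, "site-c": 150}
non_popular = [40, 60, 80]

rows = bars_with_baseline(top_sites, non_popular)
print(render_bars(rows))
```

Keeping the average as the last bar gives the reader a fixed reference to compare every top site against.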

But alas, we are treated to an unfortunate bubble chart that does nothing but look pretty (and ridiculously large).

What do you think? How many bubbles are too many?

Recommended Reading on Bubble Charts: Travel Site Search Patterns in Bubbles, Good Bubble Chart about the Bust, Olympic Medals per Country

14 Responses to “Group Smaller Slices in Pie Charts to Improve Readability”

I think the virtue of pie charts is precisely that they are difficult to decode. In many contexts, you have to release information but you don't want the relationship between values to jump at your reader. That's when pie charts are most useful.

Chandoo,

Millions of ants cannot be mistaken... There should be a reason why everybody continues using pie charts, despite what gurus like you or Jon and others say.

One reason could be that we are just used to them, so that's what we need to change: the "comfort zone"...

I absolutely agree. Since I've been "converted", I find that bar charts are clearer and nicer to look at...

Regards,

Martin

Chandoo -

You ask "Can I use an alternative to pie chart?"

I answer in You Say “Pie”, I Say “Bar”.

This visualization was created because it was easy to print before computers. In this day and age, it should not exist.

I think the 100% Bar Chart is just as useless/unreadable as Pies - we should rename them something like Mama's Strudel Charts - how big a slice would you like, Dear?

My money's with Jon on this topic.

The primary function of any pie chart with more than 2 or 3 data points is to obfuscate. But maybe that is the main purpose, as @Jerome suggests...

@Jerome.. Good point. Also sometimes, there is just no relationship at all.

@Martin... Organized religion is finding it tough to get converts even after 2000+ years of struggle. Jon, Stephen, countless others (and me) are a small army; it would take at least 5000 more years before pie charts vanish... Patience, and good to have you here 🙂

@Jon .. very well done sir, very well done.

Good points, everyone...

I've got to throw my vote into Jon's camp (which is also Stephen Few's camp) -- bars just tend to work better. One observation about when we say "what people are used to." There are two distinct groups here (depending on the situation, a person can fall in either one): the person who *creates* the chart and the person who *consumes* the chart. Granted, the consumers are "used to" pie charts. But, it's not like a bar chart is something they would struggle to understand or that would require explanation (like sparklines and bullet graphs). Chart consumers are "used to" consuming whatever is put in front of them. Chart creators, on the other hand, may be "used to" creating pie charts, but that isn't an excuse for them to continue to do so -- many people are used to driving without a seatbelt, leaving lights on in their house needlessly, and forwarding not-all-that-funny anecdotes via email. That doesn't mean the practice shouldn't be discouraged!

Good article. Is it possible to do that with line charts?

Hi,

Is this available in Excel 2013?