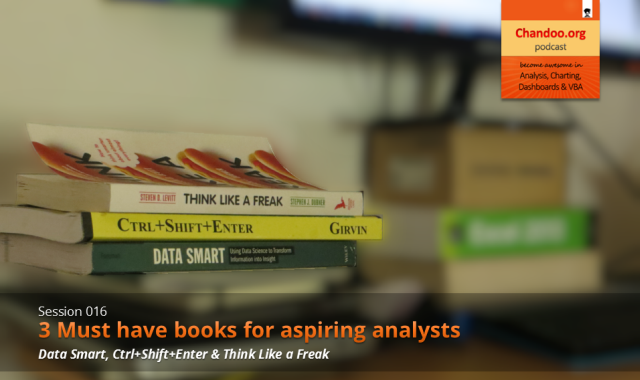

In the 16th session of the Chandoo.org podcast, let's review 3 very useful books for aspiring analysts.

What is in this session?

Analytics is an increasingly popular area now. Every day, scores of fresh graduates report for their first day of work as analysts. But how do you succeed as an analyst?

By learning & practicing, of course.

And books play a vital role in opening new pathways for us. They can alter the way we think, shape our behavior and make us awesome, all in a few page turns.

So in this episode, let me share 3 must-have books for (aspiring) analysts.

Note: these are not the only 3 books you should read. But these 3 are the ones I am reading now, and I think they will certainly help you.

Participate in our Analyst Book Giveaway & win:

- You could win a copy of any of these 3 books.

- Last date: 15-AUG-2014 – Friday.

Listen to the podcast to know how to participate.

This podcast is essentially a review of the 3 books – Data Smart by John Foreman, Ctrl+Shift+Enter by Mike Girvin & Think Like a Freak by Steven Levitt & Stephen Dubner.

Data Smart by John Foreman

- Why this book?

- About John Foreman

- Writing style

- Example chapter

Ctrl+Shift+Enter by Mike Girvin

- Why this book?

- About Mike Girvin

- Writing style

- Example Array Formulas

Think Like a Freak by Steven Levitt & Stephen Dubner

- Why a non-Excel book?

- About authors

- Writing style

- Example Chapter on Takeru Kobayashi

Note about the book links: These are affiliate links. This means that when you click on them and purchase a copy of the book, Chandoo.org receives a small commission. I recommend these books because I really enjoy them and I genuinely think they will benefit you. I would have recommended them even if there were no commission involved.

Go ahead and listen to the show

Podcast: Play in new window | Download

Subscribe: RSS

Links & Resources mentioned in this session:

About the bicycle ride

Excelapalooza Excel conference:

Advanced Excel, Dashboards & Power Pivot Masterclass:

More on Array Formulas:

Transcript of this session:

Download this podcast transcript [PDF].

Which is your favorite book for analysts?

I read quite a few books every year. Apart from these three, I also enjoy and recommend these books.

What about you? What is your favorite book for analysts? Please share your thoughts in the comments.


8 Responses to “Pivot Tables from large data-sets – 5 examples”

Do you have links to any sites that provide free, large test data sets? Large both in diversity and in total number of rows.

Good question Ron. I suggest checking out kaggle.com or data.world, or creating your own with RANDBETWEEN(). You can also get a complex business data set from the Microsoft Power BI website: the Contoso retail data.
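For anyone who would rather build a test file outside Excel, here is a minimal Python sketch of the "create your own" idea. It is only an illustration: the column names, value ranges and row count are all made up, and `random.randint` stands in for RANDBETWEEN() because both include the lower and upper bounds.

```python
import csv
import io
import random


def make_test_rows(n_rows, seed=0):
    """Build n_rows of made-up sales records (all column names are hypothetical)."""
    rng = random.Random(seed)  # fixed seed so the same data is produced every run
    regions = ["North", "South", "East", "West"]
    products = ["Widget", "Gadget", "Gizmo"]
    rows = []
    for order_id in range(1, n_rows + 1):
        rows.append({
            "order_id": order_id,
            "region": rng.choice(regions),
            "product": rng.choice(products),
            # randint(a, b) includes both ends, like RANDBETWEEN(a, b)
            "units": rng.randint(1, 50),
            "unit_price": rng.randint(5, 500),
        })
    return rows


def rows_to_csv(rows):
    """Serialize the rows to CSV text that Excel can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


sample = make_test_rows(1000)
csv_text = rows_to_csv(sample)
```

Raise the row count to get a larger set, and write `csv_text` to a `.csv` file to open it in Excel.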

Hi Chandoo,

I work with large data sets all the time (80-200MB files with 100Ks of rows and 20-40 columns) and I've taken a few steps to reduce the size (to 20-60MB) so they can be shared more easily and work more quickly. These steps include: creating custom calculations in the pivot instead of having additional data columns, deleting the data tab and saving as an xlsb. I've even tried INDEX/MATCH instead of VLOOKUP, although I'm not sure that saved much. Are there any other tricks to further reduce the file size? Thanks, Steve

Hi Steve,

Good tips on how to reduce the file size and / or processing time. Another thing I would definitely try is to use the Data Model to load the data rather than keeping it in the file. The steps would be:

1. connect to source data file thru Power Query

2. filter away any columns / rows that are not needed

3. load the data to model

4. make pivots from it

This would reduce the file size while providing all the answers you need.

Give it a try. See this video for some help - https://www.youtube.com/watch?v=5u7bpysO3FQ
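The filter-first idea in those four steps can be sketched outside Excel too. The Python below is only an analogy, not how Power Query or the Data Model work internally: it reads a tiny made-up CSV, drops the columns and rows that are not needed, then totals the remaining values the way a simple pivot would. All column names and values are hypothetical.

```python
import csv
import io
from collections import defaultdict

# Tiny inline stand-in for the source data file (all values are made up).
SOURCE_CSV = """region,product,units,notes
North,Widget,10,rush
South,Widget,4,
North,Gadget,7,gift
East,Widget,0,cancelled
South,Gadget,5,
"""


def load_filtered(csv_text, keep_columns, row_filter):
    """Steps 1-3: read the source, keep only the needed columns and rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {col: row[col] for col in keep_columns}
        for row in reader
        if row_filter(row)
    ]


def pivot_sum(rows, row_field, value_field):
    """Step 4: total value_field for each distinct row_field, like a simple pivot."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[row_field]] += int(row[value_field])
    return dict(totals)


model = load_filtered(
    SOURCE_CSV,
    keep_columns=["region", "units"],
    row_filter=lambda row: int(row["units"]) > 0,
)
units_by_region = pivot_sum(model, "region", "units")
print(units_by_region)  # {'North': 17, 'South': 9}
```

Because the unused columns and the zero-unit row are dropped before anything is summarized, the loaded data is smaller than the source.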

Normally when Excel processes data it utilizes all four cores on a processor. Is it true that Excel drops to using only two cores when calculating tables? And if only two cores were present, would it drop to one in a table?

I ask because I have personally noticed that when I use tables, working with the data is much slower than if I had filtered it. I like tables for obvious reasons when working with datasets. Is this true?

John:

I don't know if it is true that Excel Table processing only uses 2 threads/cores, but it is entirely possible. The program has to be enabled to handle multiple parallel threads. Excel Lists/Tables were added long ago, at a time when 2 processes was a reasonable upper limit. And, it could be that there simply is no way to program table processing to use more than 2 threads at a time...

When I've got a large data set, I will set my Excel priority to High thru Task Manager to allow it to use more available processing. Never use RealTime priority or you're completely locked up until Excel finishes.

That is a good tip Jen...