Peter Bartholomew

Well-Known Member

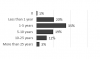

I was recently trying to analyse the results of a survey in which the questions included such as

"What is the typical life of critical spreadsheets within your organisation?"

The table on the left and the option list to the right are returned to the sheet from PowerQuery (the question number being an input parameter) but then I used formulas to return the count of each reply. For some questions the totals did not tally and it turned out that "< 5" was being treated as a numeric criterion rather than a text equality condition. Are there any standard ways of making the COUNTIFS function treat the string as a literal?

p.s. I finished up by editing the data to replace "> 20" by "More than 20 yrs" but I would have preferred to avoid touching the raw data.

"What is the typical life of critical spreadsheets within your organisation?"

The table on the left and the option list to the right are returned to the sheet from PowerQuery (the question number being an input parameter) but then I used formulas to return the count of each reply. For some questions the totals did not tally and it turned out that "< 5" was being treated as a numeric criterion rather than a text equality condition. Are there any standard ways of making the COUNTIFS function treat the string as a literal?

p.s. I finished up by editing the data to replace "> 20" by "More than 20 yrs" but I would have preferred to avoid touching the raw data.