You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Useful PowerQuery tricks: Chihiro's notes

- Thread starter Chihiro

- Start date

Chihiro

Excel Ninja

1. Use of #shared to list all available M functions.

Open blank query and paste in below code in the advanced editor.

Or... in two steps.

Open blank query and enter #shared in formula bar.

Use Record Tools to convert into Table.

Just learned this from Chandoo's sample file. This is very useful as PowerQuery/M is function driven language.

Open blank query and paste in below code in the advanced editor.

Code:

let

Source = Record.ToTable(#shared)

in

SourceOr... in two steps.

Open blank query and enter #shared in formula bar.

Use Record Tools to convert into Table.

Just learned this from Chandoo's sample file. This is very useful as PowerQuery/M is function driven language.

Chihiro

Excel Ninja

2. How to refer to previous row in PowerQuery

Often this type of calculation is performed in DAX, after data is loaded to Data Model. However, there are instances where user does not have access to PowerPivot, and only Get & Transform (PQ).

NOTE:

If the table does not have Index column, add one. This is required as you'll need sequential numbering to refer to previous row.

Formula/Code to refer to previous row value for specific column.

Previous step will be #"Added Index" if you just added index column prior.

Often this type of calculation is performed in DAX, after data is loaded to Data Model. However, there are instances where user does not have access to PowerPivot, and only Get & Transform (PQ).

NOTE:

If the table does not have Index column, add one. This is required as you'll need sequential numbering to refer to previous row.

Formula/Code to refer to previous row value for specific column.

Code:

#"PreviousStep"{[IndexColumnName]-1}[ColumnName]Previous step will be #"Added Index" if you just added index column prior.

Chihiro

Excel Ninja

4. Passing parameter from worksheet to PowerQuery

There are cases where you want to allow user to set parameter without going into PowerQuery Editor. A single cell named range can be used for this purpose.

Set up single cell with named range (ex. vVal = Sheet1!$A$1)

Then in advanced editor enter following:

Now, you can use mVal in subsequent steps in PowerQuery.

Note:

While I find above easiest to use. You can also use Table and other methods.

Ex. To refer to single cell in a table.

Where {0} indicates first row of data range.

For referencing worksheet, you can't use Excel.CurrentWorkbook method. You will need to use Excel.Workbook and specify full path of the file. Since, workbook location may change, this method isn't recommended.

To make path dynamic you can use named range method explained above. Combining it with Excel formula to get file path.

Excel formula for path (excluding file name):

There are cases where you want to allow user to set parameter without going into PowerQuery Editor. A single cell named range can be used for this purpose.

Set up single cell with named range (ex. vVal = Sheet1!$A$1)

Then in advanced editor enter following:

Code:

mVal = Excel.CurrentWorkbook(){[Name="vVal"]}[Content]{0}[Column1],Now, you can use mVal in subsequent steps in PowerQuery.

Note:

While I find above easiest to use. You can also use Table and other methods.

Ex. To refer to single cell in a table.

Code:

= Excel.CurrentWorkbook(){[Name="TableName"]}[Content]{0}[ColumnName]Where {0} indicates first row of data range.

For referencing worksheet, you can't use Excel.CurrentWorkbook method. You will need to use Excel.Workbook and specify full path of the file. Since, workbook location may change, this method isn't recommended.

To make path dynamic you can use named range method explained above. Combining it with Excel formula to get file path.

Excel formula for path (excluding file name):

Code:

=LEFT(CELL("filename"),FIND("[",CELL("filename"),1)-1)

Last edited:

Chihiro

Excel Ninja

5. Using variable in Native Query

In most instances, it is easy enough to filter data in query editor, but there are instances where you want to limit data size that's being brought in. I recommend doing it on SQL side by creating View (or prameterized query) . But this isn't feasible when you don't have permission in the database.

When passing parameter to query, you should always take care to convert variable to text type. This is especially important for numeric values. Since, & operator (concatenate) can't be used on non-text data types.

Sample native query to SQL server.

This will be shown in Advanced query as...

Note that #(lf) represents new line.

Refer to Post #5 above for how to pass variable from worksheet to PowerQuery.

Ex: Where named range vVal holds date value

Text.From is used to convert to text type.

You can then edit query to...

One drawback to this method is that editing source by database query window will not be available and you must edit query directly in formula bar or in advanced editor.

Note: You may encounter security level mismatch when variable is used in native query. If that happens, go to "Data source settings" in query editor, and set the database's security and current workbook's security to same level.

In most instances, it is easy enough to filter data in query editor, but there are instances where you want to limit data size that's being brought in. I recommend doing it on SQL side by creating View (or prameterized query) . But this isn't feasible when you don't have permission in the database.

When passing parameter to query, you should always take care to convert variable to text type. This is especially important for numeric values. Since, & operator (concatenate) can't be used on non-text data types.

Sample native query to SQL server.

Code:

SELECT *

FROM TableName as t1

WHERE t1.Date >= '8/1/2017'This will be shown in Advanced query as...

Code:

=Sql.Database("Server:Port", "Dbname", [Query="SELECT * #(lf)FROM TableName as t1 #(lf)WHERE t1.Date >= '8/1/2017'"])Refer to Post #5 above for how to pass variable from worksheet to PowerQuery.

Ex: Where named range vVal holds date value

Code:

mVal = Text.From(Excel.CurrentWorkbook(){[Name="vVal"]}[Content]{0}[Column1]),Text.From is used to convert to text type.

You can then edit query to...

Code:

=Sql.Database("Server:Port", "Dbname", [Query="SELECT * #(lf)FROM TableName as t1 #(lf)WHERE t1.Date >= '" & mVal & "'"])One drawback to this method is that editing source by database query window will not be available and you must edit query directly in formula bar or in advanced editor.

Note: You may encounter security level mismatch when variable is used in native query. If that happens, go to "Data source settings" in query editor, and set the database's security and current workbook's security to same level.

Last edited:

Chihiro

Excel Ninja

6. Check if value is number

Normally, in PowerQuery, you'd keep column data type consistent, be it Numeric; Text or some other type.

However, in some cases, when the data is brought in to PowerQuery, it isn't of consistent type. In order to do transformation sometimes it is necessary to identify what type of data is contained in each row of a column. Following formula can be used to identify numeric data.

This will return True for numeric and False for other data types.

Alternately you can use following to return actual numeric value.

This will return actual numeric value from column row if numeric and null if not.

Normally, in PowerQuery, you'd keep column data type consistent, be it Numeric; Text or some other type.

However, in some cases, when the data is brought in to PowerQuery, it isn't of consistent type. In order to do transformation sometimes it is necessary to identify what type of data is contained in each row of a column. Following formula can be used to identify numeric data.

Code:

= Value.Is(Value.FromText([ColumnName]), type number)This will return True for numeric and False for other data types.

Alternately you can use following to return actual numeric value.

Code:

= try Number.From([ColumnName]) otherwise nullThis will return actual numeric value from column row if numeric and null if not.

Chihiro

Excel Ninja

7. PowerTrim

Unlike Excel's Trim function Text.Trim in PQ does not trim mid string spaces. I used to use Text.Replace with double spaces. But found this great function by Ken Puls.

This is really useful custom function to keep in your bag of tricks and I always have it handy in my NotePad ++ Library of code/function snippets.

Below is link to Ken's article on the function.

https://www.excelguru.ca/blog/2015/10/08/clean-whitespace-in-powerquery/

Unlike Excel's Trim function Text.Trim in PQ does not trim mid string spaces. I used to use Text.Replace with double spaces. But found this great function by Ken Puls.

Code:

(text as text, optional char_to_trim as text) =>

let

char = if char_to_trim = null then " " else char_to_trim,

split = Text.Split(text, char),

removeblanks = List.Select(split, each _ <> ""),

result=Text.Combine(removeblanks, char)

in

resultThis is really useful custom function to keep in your bag of tricks and I always have it handy in my NotePad ++ Library of code/function snippets.

Below is link to Ken's article on the function.

https://www.excelguru.ca/blog/2015/10/08/clean-whitespace-in-powerquery/

Chihiro

Excel Ninja

8. Max Date across Multiple Column

Recently I was asked to help client analyze customer purchase data. To obtain average duration from previous purchase made, for list of customers that made purchase in a given month. This is normally very easy to do with flat table.

However, client had data stored in View in database and had 3 columns tracking previous purchase made using different payment method. These columns had null value when there is no record.

Typically this can be handled using SQL Unpivot (see link below for explanation).

https://blogs.msdn.microsoft.com/sa...-finding-the-max-value-from-multiple-columns/

But client was using SQL Server 2003... which doesn't support Unpivot function and also eliminated several other methods I typically use (Cross Apply, NULLIF).

This had me scratching my head for a bit. Then I remembered that PowerQuery had unpivot tool.

Process:

1. Data was brought in from SQL without any transformation or aggregation, as there was need to validate process and compare against result obtained using SQL Query (I imported table into SQL2012 for this purpose and used previously mentioned Unpivot combined with Row_Number function).

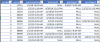

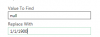

2. Once data is brought into PowerQuery, select the 3 prevType columns (i.e. date columns) and replace null values with some non-existent date value (typically 1/1/1900). Without this step, when all 3 columns have null, that line will disappear from result.

Note: Make sure you use all lower case for null.

3. With same 3 columns selected, unpivot the columns.

4. Select all columns except the new attribute column and value column and Group by, using Max of Value column as aggregation.

5. Replace 1/1/1900 with null. Done

BONUS: Duration in days.

Recently I was asked to help client analyze customer purchase data. To obtain average duration from previous purchase made, for list of customers that made purchase in a given month. This is normally very easy to do with flat table.

However, client had data stored in View in database and had 3 columns tracking previous purchase made using different payment method. These columns had null value when there is no record.

Typically this can be handled using SQL Unpivot (see link below for explanation).

https://blogs.msdn.microsoft.com/sa...-finding-the-max-value-from-multiple-columns/

But client was using SQL Server 2003... which doesn't support Unpivot function and also eliminated several other methods I typically use (Cross Apply, NULLIF).

This had me scratching my head for a bit. Then I remembered that PowerQuery had unpivot tool.

Process:

1. Data was brought in from SQL without any transformation or aggregation, as there was need to validate process and compare against result obtained using SQL Query (I imported table into SQL2012 for this purpose and used previously mentioned Unpivot combined with Row_Number function).

2. Once data is brought into PowerQuery, select the 3 prevType columns (i.e. date columns) and replace null values with some non-existent date value (typically 1/1/1900). Without this step, when all 3 columns have null, that line will disappear from result.

Note: Make sure you use all lower case for null.

3. With same 3 columns selected, unpivot the columns.

4. Select all columns except the new attribute column and value column and Group by, using Max of Value column as aggregation.

5. Replace 1/1/1900 with null. Done

BONUS: Duration in days.

Code:

= if [LastPrevPurchase] = null then null else Duration.Days(Duration.From([purchaseTime]-[LastPrevPurchase]))GraH - Guido

Well-Known Member

Hi Chihiro, I've stumbled across a video by Oz du Soleil revealing a vlookup option true with the use of the UI only. So no M-code involved. The option used is an append query and then some clever sorting and a fill down.

I believe it has its use.

http://ozdusoleil.com/2018/03/01/vlookup-true-equivalent-in-power-query-with-dynamic-tiers/

I believe it has its use.

http://ozdusoleil.com/2018/03/01/vlookup-true-equivalent-in-power-query-with-dynamic-tiers/

Chihiro

Excel Ninja

9. Wildcard search (string) in PowerQuery

In simplest form comparing string to string.

It will return True if substring is within string.

However, in many cases, you'd want to ignore case sensitivity. In which case you'd use 3rd argument for Text.Contains.

But, Text.Contains does not handle multiple substring. You could write multiple Text.Contains function, but that's tedious.

This is where Splitter.SplitTextByAnyDelimiter comes in handy. This function can accept list of delimiters to split string. Instead of just one delimiter (SplitTextByDelimiter).

As Splitter.SplitTextByxxx will always produce 1 item in list (i.e. delimiter isn't present in string = 1, meaning string isn't split). You can use List.Count on the result to test if substring is contained.

Ex: From following check if field contains "cat" or "dog", case sensitive.

If you want to exclude string containing "cat" or "dog"...

There is no internal argument to make it case insensitive, so you'd need to make [Text] all lower case etc to accommodate for cases (or the other way around).

Since list can be a table column. If you have another table with list of substrings you could use that in place of hard coded list... see attached for how this is done.

In simplest form comparing string to string.

Code:

= Text.Contains("String","Substring")However, in many cases, you'd want to ignore case sensitivity. In which case you'd use 3rd argument for Text.Contains.

Code:

= Text.Contains("String","Substring", Comparer.OrdinalIgnoreCase)But, Text.Contains does not handle multiple substring. You could write multiple Text.Contains function, but that's tedious.

This is where Splitter.SplitTextByAnyDelimiter comes in handy. This function can accept list of delimiters to split string. Instead of just one delimiter (SplitTextByDelimiter).

As Splitter.SplitTextByxxx will always produce 1 item in list (i.e. delimiter isn't present in string = 1, meaning string isn't split). You can use List.Count on the result to test if substring is contained.

Ex: From following check if field contains "cat" or "dog", case sensitive.

Code:

= List.Count(Splitter.SplitTextByAnyDelimiter({"dog","cat"})([Text]))>1If you want to exclude string containing "cat" or "dog"...

Code:

= List.Count(Splitter.SplitTextByAnyDelimiter({"dog","cat"})([Text]))=1There is no internal argument to make it case insensitive, so you'd need to make [Text] all lower case etc to accommodate for cases (or the other way around).

Since list can be a table column. If you have another table with list of substrings you could use that in place of hard coded list... see attached for how this is done.

Attachments

Chihiro

Excel Ninja

10. Using SQL query on Excel Workbook

PowerQuery has built in "From Workbook" query to bring in data from Excel file. However, there are times when you wish to transform data before it's brought in via PQ.

You can use Odbc.Query() for this purpose.

https://msdn.microsoft.com/en-us/query-bi/m/odbc-query

Sample connection string:

dbq - The file location including file name.

defaultdir - Folder containing the file

MaxScanRows - Number of rows used to determine column data type.

This is critical parameter, especially if there is any column with string and numeric values intermixed. It can be set between 0 to 16. 0 will scan entire column before choosing type and can slow down query. If there are more numeric cells in row range than string, it will cause string values to be null. In those cases, first sort the source file to have string value at first data row, and use MaxScanRows = 1. This will ensure no values are missed in the query.

Sample source formula.

Sheet will be treated as table and it's name should always suffixed by "$". Columns and tables should be nested in [].

There are many SQL statement that are not supported or have limitations in ODBC drivers (Ex. CONVERT() can't be used). Have a read of link below for more details on ODBC.

https://docs.microsoft.com/en-us/sq...desktop-database-drivers?view=sql-server-2017

PowerQuery has built in "From Workbook" query to bring in data from Excel file. However, there are times when you wish to transform data before it's brought in via PQ.

You can use Odbc.Query() for this purpose.

https://msdn.microsoft.com/en-us/query-bi/m/odbc-query

Sample connection string:

Code:

"dbq=C:\USERS\USERNAME\FOLDER\Receipt dump.xlsx;defaultdir=C:\USERS\USERNAME\FOLDER;driverid=1046;maxbuffersize=2048;MaxScanRows=1;pagetimeout=5;dsn=Excel Files"dbq - The file location including file name.

defaultdir - Folder containing the file

MaxScanRows - Number of rows used to determine column data type.

This is critical parameter, especially if there is any column with string and numeric values intermixed. It can be set between 0 to 16. 0 will scan entire column before choosing type and can slow down query. If there are more numeric cells in row range than string, it will cause string values to be null. In those cases, first sort the source file to have string value at first data row, and use MaxScanRows = 1. This will ensure no values are missed in the query.

Sample source formula.

Code:

= Odbc.Query("dbq=C:\USERS\USERNAME\FOLDER\Receipt dump.xlsx;defaultdir=C:\USERS\USERNAME\FOLDER;driverid=1046;maxbuffersize=2048;MaxScanRows=1;pagetimeout=5;dsn=Excel Files", "Select Distinct * From [Sheet1$]")Sheet will be treated as table and it's name should always suffixed by "$". Columns and tables should be nested in [].

There are many SQL statement that are not supported or have limitations in ODBC drivers (Ex. CONVERT() can't be used). Have a read of link below for more details on ODBC.

https://docs.microsoft.com/en-us/sq...desktop-database-drivers?view=sql-server-2017

Chihiro

Excel Ninja

11. Dynamic Merge (using List.Accumulate)

Typically, you'd not merge files (join), but append files (union) to query multiple source into single table in PowerQuery. However, there are occasionally need to merge multiple source into single table.

PowerQuery has native functionality to append multiple files dynamically. However, lacks functionality to merge multiple files dynamically.

Following is method to accomplish this through use of List.Accumulate.

1. Query folder and use append to generate base transformation steps.

2. Remove queries contained in "Other Queries" group.

3. Make sure first row of the sample file is "NOT" promoted as header. This is important, as you'd want fixed column name for shared first column.

4. Add custom function "fMerge". Number.ToText is used to dynamically change column name here, though you can use other logic, such as List.Generate, if so desired.

5. Then add CSV query to step through each file in folder.

Key here, is List.Accumulate(), it is used to perform recursive join by passing result of fMerge on to subsequent iteration as "state".

Note: fPath is variable used to obtain target folder via Named range stored in sample file. See attached sample for more detail.

You can read more about List.Accumulate in links below.

https://msdn.microsoft.com/en-us/query-bi/m/list-accumulate

http://radacad.com/list-accumulate-hidden-gem-of-power-query-list-functions-in-power-bi

https://datachant.com/2016/06/02/power-query-list-accumulate-unleashed/

Typically, you'd not merge files (join), but append files (union) to query multiple source into single table in PowerQuery. However, there are occasionally need to merge multiple source into single table.

PowerQuery has native functionality to append multiple files dynamically. However, lacks functionality to merge multiple files dynamically.

Following is method to accomplish this through use of List.Accumulate.

1. Query folder and use append to generate base transformation steps.

2. Remove queries contained in "Other Queries" group.

3. Make sure first row of the sample file is "NOT" promoted as header. This is important, as you'd want fixed column name for shared first column.

4. Add custom function "fMerge". Number.ToText is used to dynamically change column name here, though you can use other logic, such as List.Generate, if so desired.

Code:

(tbla as table, tblb as table) as table =>

let

Source = Table.NestedJoin(tbla,{"Column1"},tblb,{"Column1"},"Joined",JoinKind.LeftOuter),

ColToExp = List.Skip(Table.ColumnNames(tblb),1),

Expand = Table.ExpandTableColumn(Source, "Joined", ColToExp, {ColToExp{0} & Number.ToText(Table.ColumnCount(Source))})

in

Expand

Code:

let

fPath = Excel.CurrentWorkbook(){[Name="vPath"]}[Content]{0}[Column1],

Source = Folder.Files(fPath),

#"Invoke Custom Function1" = Table.AddColumn(Source, "Transform File from CSV", each #"Transform File from CSV"([Content])),

#"Renamed Columns1" = Table.RenameColumns(#"Invoke Custom Function1", {"Name", "Source.Name"}),

#"Removed Other Columns1" = Table.SelectColumns(#"Renamed Columns1", {"Source.Name","Transform File from CSV"}),

JoinTbl = List.Accumulate(

List.Skip(#"Removed Other Columns1"[Transform File from CSV]),

#"Removed Other Columns1"[Transform File from CSV]{0},

(JoinedTable,CurrentTable) => fMerge(JoinedTable, CurrentTable)

)

in

JoinTblKey here, is List.Accumulate(), it is used to perform recursive join by passing result of fMerge on to subsequent iteration as "state".

Note: fPath is variable used to obtain target folder via Named range stored in sample file. See attached sample for more detail.

You can read more about List.Accumulate in links below.

https://msdn.microsoft.com/en-us/query-bi/m/list-accumulate

http://radacad.com/list-accumulate-hidden-gem-of-power-query-list-functions-in-power-bi

https://datachant.com/2016/06/02/power-query-list-accumulate-unleashed/

Attachments

Last edited:

Chihiro

Excel Ninja

12. Update to '8. Max Date across Multiple Column'

Rather than using Unpivot operation, you can use List.Max function. You just have to make sure to write custom column formula with list syntax. Each column value being list item.

Ex: Using example from post #9. Assuming "NULL" string are replaced with actual null value (i.e. "null").

Rather than using Unpivot operation, you can use List.Max function. You just have to make sure to write custom column formula with list syntax. Each column value being list item.

Ex: Using example from post #9. Assuming "NULL" string are replaced with actual null value (i.e. "null").

Code:

=List.Max({[prevType1],[prevType2],[prevType3]})Chihiro

Excel Ninja

13. Clean/filter out all special characters from entire table in one shot

You can use Text.Replace() to remove special characters, but this becomes tedious and unreliable when there are unknown number of special characters, especially when dealing with 3rd party export.

Doing reverse operation (i.e. extracting characters you wish to retain) is more robust.

Ex: To retain only alphanumeric characters along with space character.

However, as is, this requires function to be repeated for each column of the table. By combining this with List.Transform, it can be done for every column generated by Table.ColumnNames. You can further customize it if you know the columns that should be excluded from this operation by using List.Select etc.

Ex:

You can use Text.Replace() to remove special characters, but this becomes tedious and unreliable when there are unknown number of special characters, especially when dealing with 3rd party export.

Doing reverse operation (i.e. extracting characters you wish to retain) is more robust.

Ex: To retain only alphanumeric characters along with space character.

Code:

= Table.TransformColumns(#"Changed Type", {"Column1", each Text.Select(_,{"a".."z","A".."Z","0".."9"," "})})However, as is, this requires function to be repeated for each column of the table. By combining this with List.Transform, it can be done for every column generated by Table.ColumnNames. You can further customize it if you know the columns that should be excluded from this operation by using List.Select etc.

Ex:

Code:

= Table.TransformColumns(#"Changed Type", List.Transform(Table.ColumnNames(#"Changed Type"), each {_, each Text.Select(_, {"a".."z","A".."Z","0".."9"," "}), type text}))Chihiro

Excel Ninja

14. Various Table/List manipulation

Recently had to build process to import customer generated PDF and transform it into format that can be imported into our project management software. While I can't share entire process, here are some useful operations to sanitize PDF.

Check if a column in [Data] of Pdf.Table() has specific text.

Add custom column with following.

Alternately if you want to check if specific text appears anywhere in [Data]

Above is useful in filtering Pdf.Table() to specific rows which you need for further processing.

Transform all columns to {type text}.

Function to find first column in given row where field value is numeric. It returns null if no numeric field found in row.

Useful when columns are jagged and you need to find position of column to concatenate fields based on position. You can change above to find position of column that start with specific text etc.

Recently had to build process to import customer generated PDF and transform it into format that can be imported into our project management software. While I can't share entire process, here are some useful operations to sanitize PDF.

Check if a column in [Data] of Pdf.Table() has specific text.

Add custom column with following.

Code:

=List.Contains([Data][Column1],"SomeText")Alternately if you want to check if specific text appears anywhere in [Data]

Code:

=Text.Contains(Text.Combine(Table.ToList([Data], Combiner.CombineTextByDelimiter("", QuoteStyle.None)),""),"SomeText")Transform all columns to {type text}.

Code:

=Table.TransformColumnTypes(Source, List.Transform(Table.ColumnNames(Source), each {_, type text}))Function to find first column in given row where field value is numeric. It returns null if no numeric field found in row.

Code:

(tbl as table, mRow as number) =>

let

lVal = Record.ToList(tbl{mRow}),

firstNum = List.Select(lVal, each Value.Is(Value.FromText(_), type number)){0},

colPos = try List.PositionOf(lVal, firstNum) otherwise null

in

colPos